You can always safely bet that you will have to take time to make sure that the data in your dataset is “cleaned”.

But what is it meant to have “clean data”? No, you can’t take some Windex, spray it on your computer screen, and say adiós to the bad data (but dang, that’d be nice!).

Data cleaning is a process of removing errors, outliers, and inconsistencies so that your data is in a format that is appropriate for your analysis.

Table of Contents

What is “Dirty Data”?

Dirty data is data that doesn’t fit your needs for your analysis. There’s a number of different kinds of dirty data, such as missing values, redundant values, outliers, incorrect data types, inconsistencies, and so much more.

We can further expand on inconsistencies - it’s data that may have different date formats (YYYY-mm-dd vs dd/mm/yyyy vs mm/dd/yyyy), casing (New York vs new york) or even measurements (kg vs freedom units 🇺🇸).

In order to clean your data, you’ll need to understand your dataset and set a few rules as you’re cleaning it following a framework that I totally did not create (just kidding - I did).

Framework for cleaning your data: CLEAR

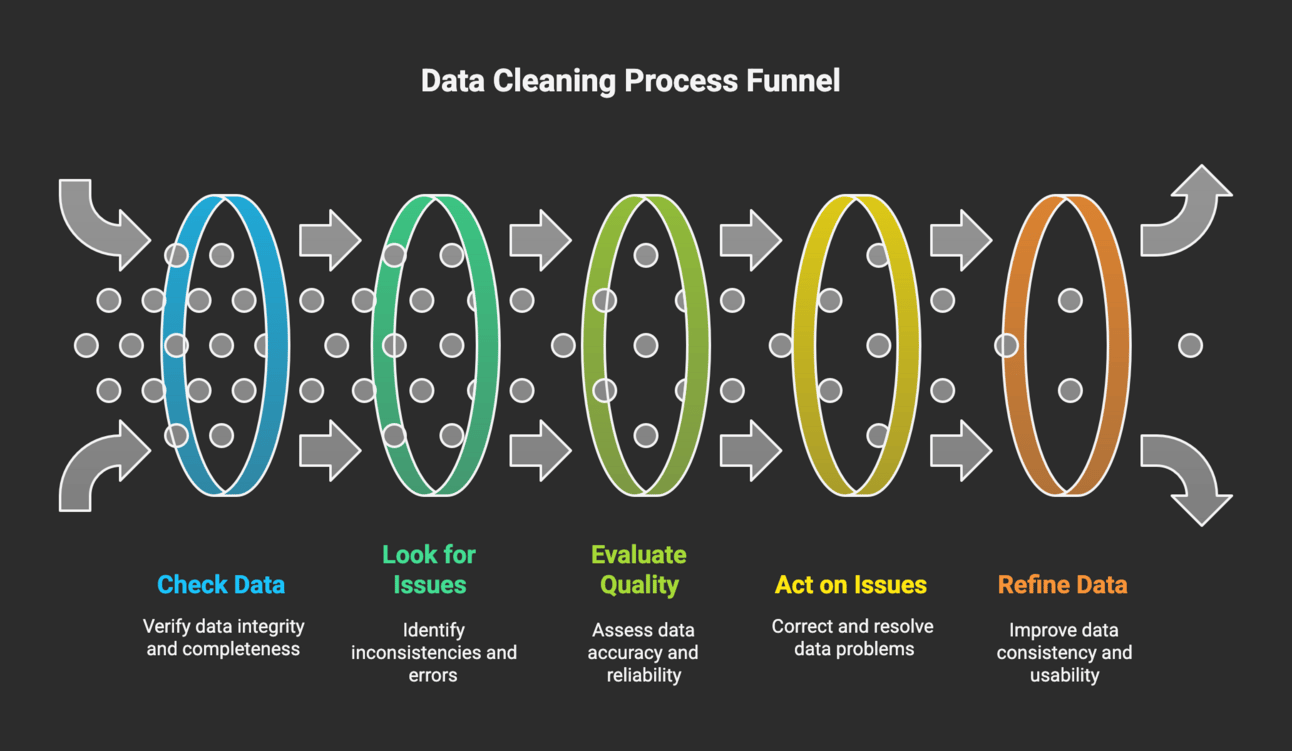

Whenever I am trying to clean datasets, I like to follow a framework called CLEAR: Check, Look, Evaluate, Act, Refine.

This provides a structured, step-by-step approach to ensure that the data is accurate, consistent, and ready for analysis. Each phase in this framework builds on the previous step, which allows you to systematically identify and resolve data quality issues.

1. Check

First, we want to check for common issues such as missing values, duplicates, or outliers. In addition, we want to examine our data for statistics and any potential categories (assuming df is our dataframe):

df.info() # General info

df.describe() # Statistics

df.head(10) # Peek @ top-most data

df.isnull().sum() # Any null values in columns?

df.duplicated() # are there duplicate rows?

df.nunique() # number of unique values

df.dtypes # What data types?

df['column'].value_counts() # frequency of unique values

df.corr() # Check for any correlationsThere’s much more we can do, such as checking for data length consistency, data ranges, zero variance columns, unwanted symbols in strings, checking for negative numbers, etc. It’s all dependent upon how you want your data to be cleaned.

2. Look at your data

Plot your data somehow! Seaborn and matplotlib both make it easy to plot your data. I suggest plotting:

Heat maps to check for missing data and correlations

Histograms to check data distribution

Box plots to spot outliers and distributions across multiple categories

Line/Scatter plots for spotting trends, clusters, and outliers

Take the below sample script for some plots:

import seaborn as sns

import matplotlib.pyplot as plt

# Heat map: correlation plot

sns.heatmap(df.corr(), annot=True, cmap='coolwarm')

# Heat map: missing data

sns.heatmap(df.isnull(), cbar=False, cmap='viridis')

# Histogram

df['numerical_column'].hist(bins=20)

# Box and Whisker

sns.boxplot(x='category', y='numerical', data=df)

# Scatter plot

plt.scatter(df['col1'], df['col2'])Hot take: you don’t understand your data until you can visualize it.

3. Evaluate your data

Once we get to this part of the framework, we want to:

Understand how and why the issues found in the previous 2 steps exists

Establish rules to implement cleaning the data to address questions posed

Specifically, you’re going to want to determine what should be fixed, what should be removed, and what should be retained but transformed and why you should implement these steps.

You likely won’t be writing any code here, as you’ll be developing a strategy as to how you’ll fix the data.

For example, when we are evaluating missing data we want to ask ourselves important questions such as “is the missing data random, or does it follow a pattern?", “How much missing data is there, and does it affect critical columns”?

Similarly, for duplicate data, we may want to ask ourselves something along the lines of “how widespread are these duplicates?”, or “are these accidental data entry errors?”

4. Act on the data

Then, we want to act on cleaning the data. Here, put all of the insights from all of the previous phases of the framework and implement the rules and strategies to clean and transform your data.

The goal here is to ensure that the data is complete, accurate, and prepared by methodically implementing the decisions you’ve made in the previous step.

Let’s say that during our check we came across a few outliers in our data. We detected this in the check phase by df.describe() and in our graphs with a box plot. After looking into it further, we decided that these outliers were simply bad inputs.

Our strategy that we selected to filter our outliers is by removing data with z-scores less than -3 and above 3:

from scipy import stats

df['z_score'] = stats.zscore(df['column_name'])

outliers = df[(df['z_score'] > 3) | (df['z_score'] < -3)]» If you don’t know what z-scores are, check out this article on Statistics How To.

5. Refine your data

Once you’ve written code to act on the data, you then can enter the refine phase, which has 3 goals:

Revalidate your data so that it’s now accurate, consistent, and aligned with the rules you set in the evaluate phase.

Fine-tune any remaining issues or edge cases that weren’t fully resolved in the initial cleaning.

Prepare for analysis, modeling, and/or reporting.

One simple way to get started is to re-run the commands from the check phase, such as df.describe() and df.info().

You’ll also want to re-create those various plots: histograms, box plots, scatter plots, and heat maps (bonus points if you put pre-clean and post-clean graphs side-by-side so you can clearly see the improvement).

There’s a few more ways to refine your data:

Perform another null check: df.isnull().sum()

Values are in a specified range: df[(df['col'] < val1) | (df['col'] > val2)]

Detecting outliers: df.loc[df['col'] > threshold, 'col'] = threshold

Once you’ve successfully verified your data has been cleaned, give yourself a pat on the back (you deserve it).

Other Python packages to use for data cleaning

In addition to (or in lieu of) Pandas, you may want to consider the following packages to help with data cleaning:

Happy cleaning!

📧 Join the Python Snacks Newsletter! 🐍

Want even more Python-related content that’s useful? Here’s 3 reasons why you should subscribe the Python Snacks newsletter:

Get Ahead in Python with bite-sized Python tips and tricks delivered straight to your inbox, like the one above.

Exclusive Subscriber Perks: Receive a curated selection of up to 6 high-impact Python resources, tips, and exclusive insights with each email.

Get Smarter with Python in under 5 minutes. Your next Python breakthrough could just an email away.

You can unsubscribe at any time.

Interested in starting a newsletter or a blog?

Do you have a wealth of knowledge and insights to share with the world? Starting your own newsletter or blog is an excellent way to establish yourself as an authority in your field, connect with a like-minded community, and open up new opportunities.

If TikTok, Twitter, Facebook, or other social media platforms were to get banned, you’d lose all your followers. This is why you should start a newsletter: you own your audience.

This article may contain affiliate links. Affiliate links come at no cost to you and support the costs of this blog. Should you purchase a product/service from an affiliate link, it will come at no additional cost to you.